Ravi Pandya

ML Research Scientist

Nuro

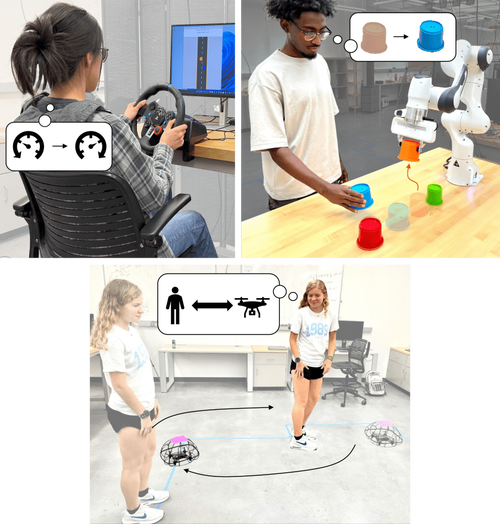

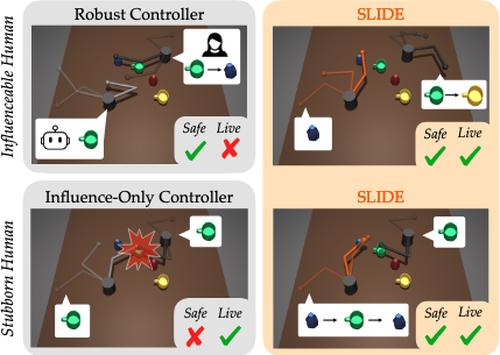

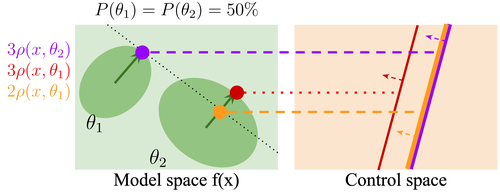

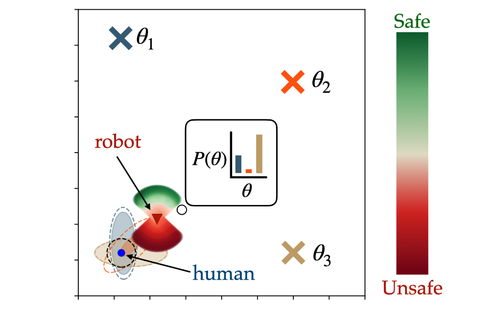

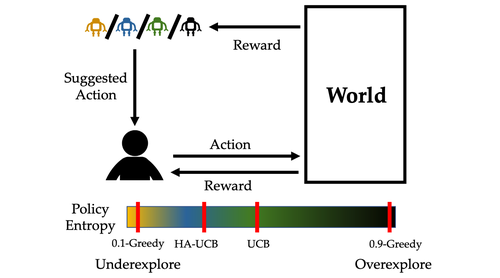

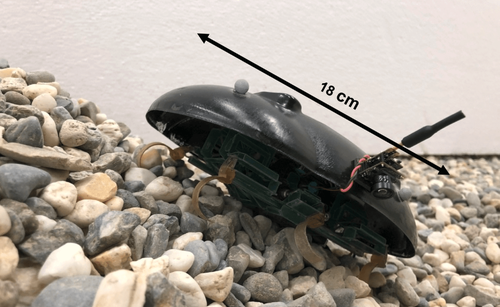

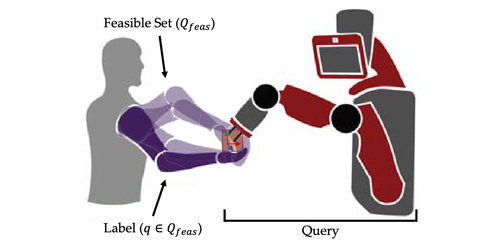

I am a Machine Learning Research Scientist at Nuro working on behavior planning. I recently completed my Ph.D. in the Robotics Institute at Carnegie Mellon University, advised by Prof. Changliu Liu and Prof. Andrea Bajcsy, and was funded in part by the NSF Graduate Research Fellowship. My Ph.D. thesis focused on using data-driven methods (e.g., reinforcement learning, trajectory forecasting, and LLMs) to enable robots to interact safely with humans while accounting for the influence they have on people’s actions and intentions. My thesis can be viewed here.

Prior to graduate school, I worked as a Data Scientist at Ericsson, where I used ML and multi-agent reinforcement learning to optimize radio networks. I also previously did research in human-robot interaction at UC Berkeley, where I primarily worked with Prof. Anca Dragan but also had the privilege of working with Prof. Ruzena Bajcsy.

Interests

- Machine Learning

- Artificial Intelligence

- Human-Robot Interaction

- Optimal Control

Education

- PhD in Robotics, 2020-2025

  Carnegie Mellon University

- BS in Electrical Engineering and Computer Science, 2015-2019

  UC Berkeley